GPT-4

https://openai.com/research/gpt-4

Almost nothing is known about GPT-4's technical details.

2 Scope and Limitations of this Technical Report

This report focuses on the capabilities, limitations, and safety properties of GPT-4. GPT-4 is a Transformer-style model [39] pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers. The model was then fine-tuned using Reinforcement Learning from Human Feedback (RLHF) [40]. Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.

GPT-4 can now take images as input.

On many benchmarks, GPT-4 scores higher than GPT-3.5.

GPT-4 handles more languages.

An earlier lecture discussed "tasks where larger models perform worse." With GPT-4 this trend reverses: GPT-4 handles these tasks correctly.

The earlier lecture referred to above (jump to 14:50): https://www.bilibili.com/video/BV1TD4y137mP/?p=29&share_source=copy_web&vd_source=04259c9260832797bf08914e26e438d5&t=890

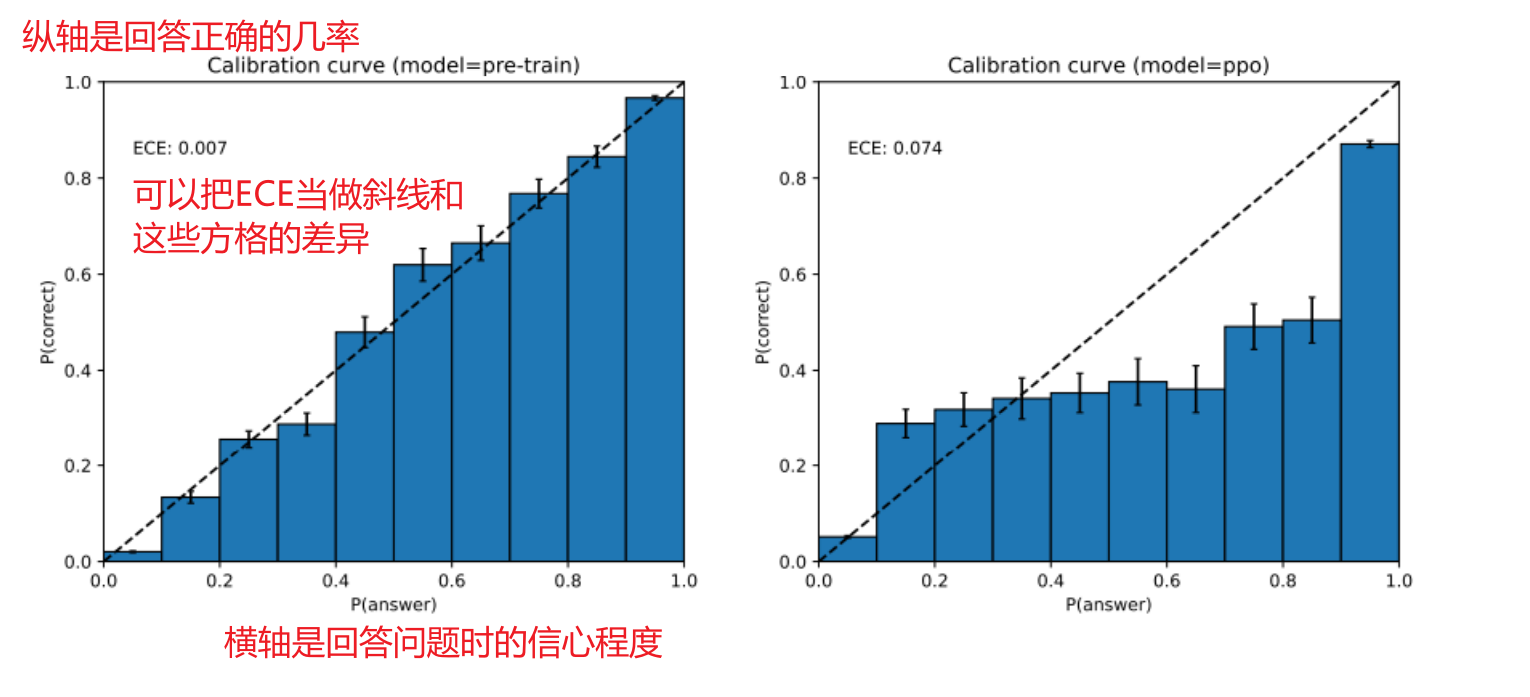

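After pre-training, GPT-4 knows what it does not know, i.e. its confidence is well calibrated. After interacting with people and learning from human teachers, it loses this calibration ability.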
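One common way to put a number on calibration is the expected calibration error (ECE): group answers by the model's stated confidence and compare each group's average confidence with its actual accuracy. The sketch below is a minimal illustration under that assumption; the function name, the bin count, and the toy inputs are made up for this note and are not taken from the GPT-4 report.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |mean confidence - accuracy| over confidence bins.

    confidences: list of floats in [0, 1], the model's stated confidence.
    correct:     list of bools, whether each answer was actually right.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    total = len(confidences)
    if total == 0:
        return 0.0

    # Assign every prediction to an equal-width confidence bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes to the last bin
        bins[index].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece


# Toy data: a calibrated model vs. an overconfident one.
calibrated = expected_calibration_error([0.5, 0.5, 1.0, 1.0], [True, False, True, True])
overconfident = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, False, False])
```

A model that "knows what it does not know" gets an ECE near zero; a model that claims 90% confidence but is right only a quarter of the time gets a large one.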

Possible approaches to image input:

Convert the image to text

Caption Generation

Optical character recognition (OCR)
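The image-to-text route can be sketched as plain prompt assembly: a caption and any OCR output are pasted in front of the user's question, so a text-only language model can answer. The function and field labels below are hypothetical, and the caption and OCR strings are assumed to come from separate models that are not shown here.

```python
def image_to_text_prompt(caption, ocr_lines, question):
    """Build a text-only prompt from a caption and OCR output (both assumed given)."""
    parts = [f"Image caption: {caption}"]
    if ocr_lines:
        # OCR may return several text snippets; join them into one line.
        parts.append("Text found in the image: " + " | ".join(ocr_lines))
    parts.append(f"Question: {question}")
    return "\n".join(parts)


prompt = image_to_text_prompt(
    caption="a street sign next to a bicycle",
    ocr_lines=["STOP", "Main St"],
    question="What does the sign say?",
)
```

Everything the language model learns about the image is whatever survives in the caption and the OCR text, which is the main limitation of this approach.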

Convert the image to vectors:

Image Encoder

Discretize the CLIP output, then represent each discrete code with its own symbol, which turns the image into a brand-new language

Modeled on Kosmos
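The discretization idea can be sketched as nearest-neighbor vector quantization: each image-patch vector is replaced by the index of its closest codebook entry, and each index is written as a new symbol. This is only an illustration under assumptions. The codebook, the patch vectors, and the `<img_k>` token format are invented here; in practice the vectors would come from an image encoder such as CLIP, and nothing in these notes says GPT-4 actually works this way.

```python
def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (squared L2 distance)."""
    best_index, best_distance = 0, float("inf")
    for index, code in enumerate(codebook):
        distance = sum((v - c) ** 2 for v, c in zip(vector, code))
        if distance < best_distance:
            best_index, best_distance = index, distance
    return best_index


def image_to_tokens(patch_vectors, codebook):
    """Map each patch vector to a symbol such as '<img_2>' (hypothetical token format)."""
    return [f"<img_{nearest_code(vector, codebook)}>" for vector in patch_vectors]


# Toy 2-D example: three codes, three patch vectors.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
patches = [[0.1, -0.1], [0.9, 1.2], [0.1, 0.8]]
tokens = image_to_tokens(patches, codebook)
```

Once an image is a sequence of such symbols, it can be mixed into the same token stream as ordinary text and trained on with next-token prediction.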